Concept

A Virtual Reality (VR) application for learning rhythm through hand drumming, using hand tracking and four tangible pads.

Context

This project was developed during a course called Design for Emerging Technologies, which focused on VR and tangible interactions.

The project brief included the following constraints:

- Learning experience

- Virtual Reality

- Hand Tracking

- An external tangible component built with physical computing

Inquiry

Learning and keeping rhythm is a fundamental element of music, yet it can be challenging for beginners, and traditional teaching methods can feel tedious.

Our team set out to answer one question: can VR be a medium for learning musical rhythm?

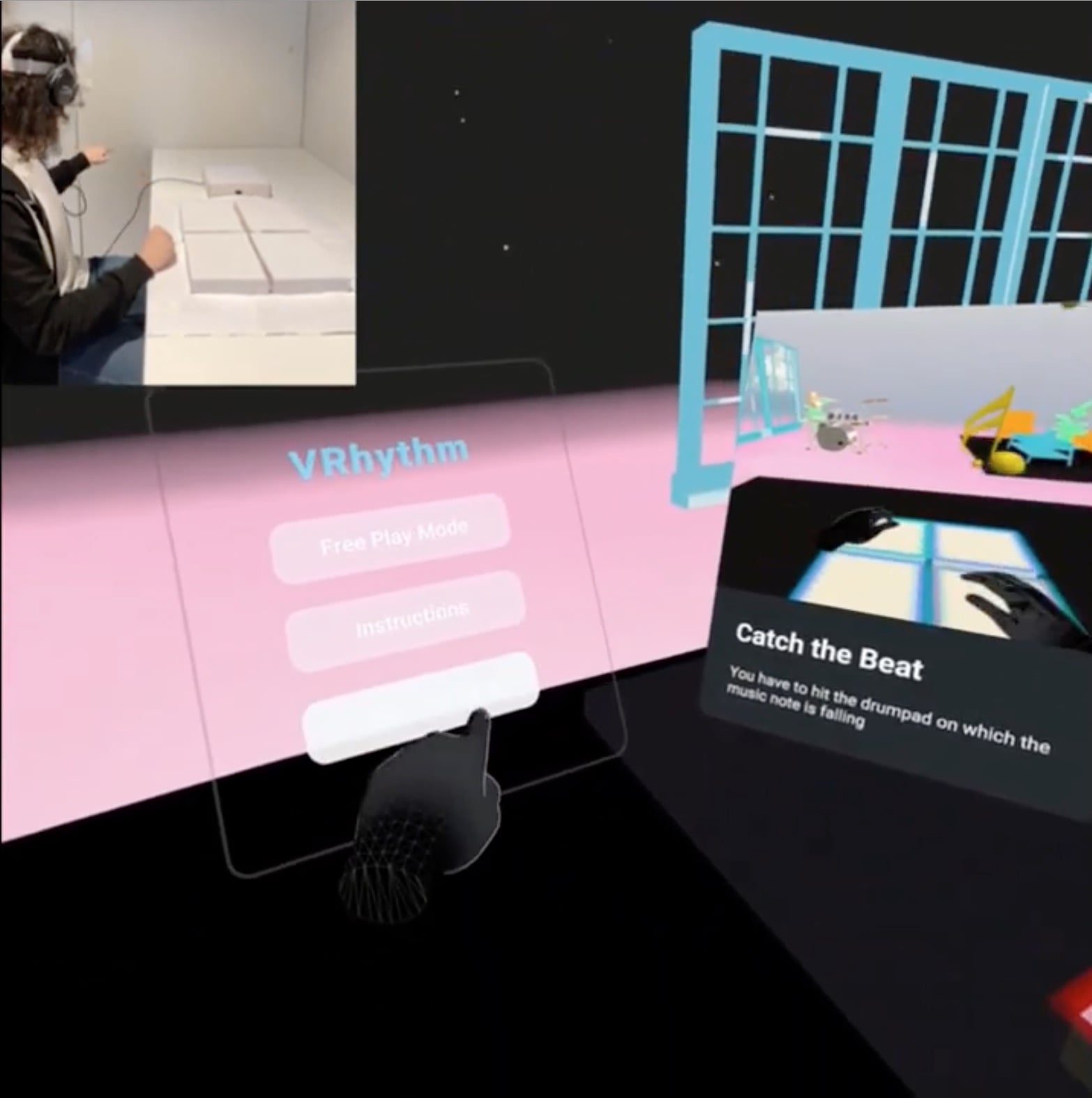

Two learning modes were offered: Free Play and Guided Learning. In Free Play, the user could play freely; in Guided Learning, the user was guided step by step in learning to keep a rhythm.

The learning modes were based on research by Lucy Green (2002). Drawing on how popular musicians learn, she describes five informal learning practices that music education can adopt: letting learners choose the music themselves, learning by listening to and copying music, learning in groups of friends, learning in personal, often haphazard ways, and integrating listening, playing and composing.

Challenges

The challenges we faced fell into three areas:

Application

- What learning mode should be prioritized?

- What degree of complexity should be incorporated while learning percussion?

- Which environment would keep learning and engagement high?

Technology

- How to connect VR with external sensors?

- How to co-locate the physical sensors with their counterparts in the VR scene?

Interactions and Interface

- What physical materials and sensors provide the best playing feedback?

- How to use hand tracking alongside physical playing?

- What visual and audio feedback will keep the learning experience coherent?

Technology

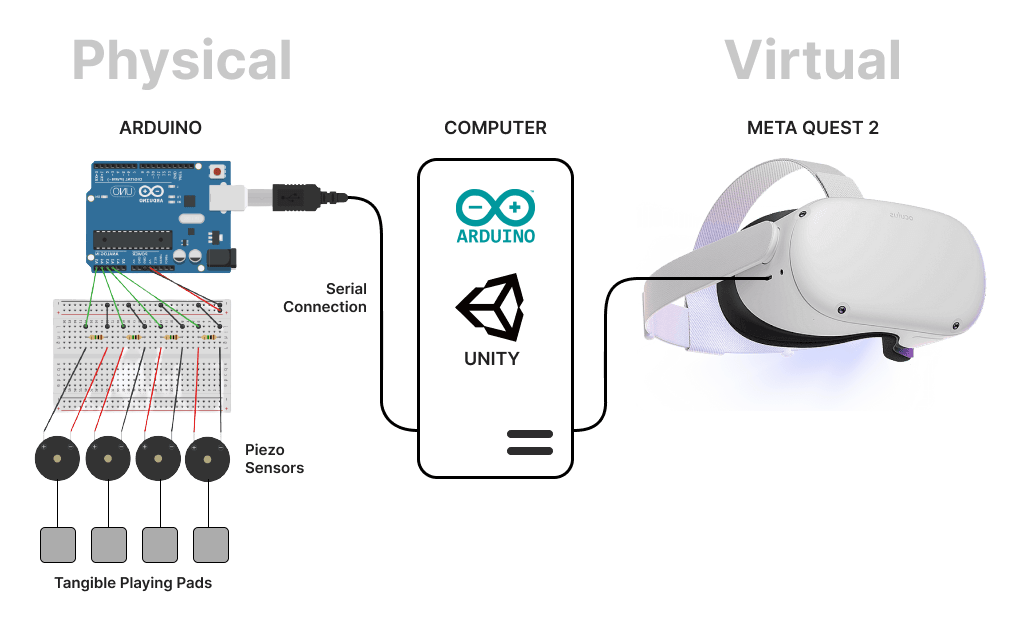

The project was built primarily in the Unity game engine, which was used to set up the VR environment and integrate its visuals and audio.

Four piezoelectric sensors were connected to an Arduino, and the sensor values were sent from the Arduino to Unity over a serial connection.
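The message format on the serial link is not documented here, so the sketch below assumes a hypothetical one: one text line per reading, `pad,value`, with a pad index from 0 to 3 and a reading from 0 to 1023 (assuming the Arduino's 10-bit `analogRead` range). It is written in Python for readability rather than the C# used in Unity, and `parse_line`, `parse_stream` and the constants are illustrative names. It shows the two chores any receiver has to handle: rejecting malformed lines and carrying a partial line over to the next read.

```python
from typing import List, Optional, Tuple

NUM_PADS = 4          # four piezo pads, as in the build described above
MAX_READING = 1023    # assumption: 10-bit analogRead range on the Arduino


def parse_line(line: str) -> Optional[Tuple[int, int]]:
    """Parse one hypothetical 'pad,value' serial line into (pad, value).

    Returns None for anything malformed, e.g. a partial line read when the
    port is first opened, so the caller can simply skip it.
    """
    parts = line.strip().split(",")
    if len(parts) != 2:
        return None
    try:
        pad, value = int(parts[0]), int(parts[1])
    except ValueError:
        return None
    if not (0 <= pad < NUM_PADS and 0 <= value <= MAX_READING):
        return None
    return pad, value


def parse_stream(chunk: str, buffer: str = "") -> Tuple[List[Tuple[int, int]], str]:
    """Split raw serial text into complete lines and keep the unfinished tail."""
    data = buffer + chunk
    *complete, tail = data.split("\n")  # the last piece may be an incomplete line
    events = [e for e in (parse_line(l) for l in complete) if e is not None]
    return events, tail
```

Keeping the unfinished tail matters because a serial read can end mid-line; discarding it would lose or corrupt the next reading.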

The headset was a Meta Quest 2, and the VR scene with hand tracking was created in Unity using the Oculus Integration SDK.

Technical Design

On the physical computing side, the sensor signals had to be sent to Unity while detecting both single and multiple hits. Determining the intensity of each hit was also important.
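The hit-detection algorithm is not described in detail here. One common approach for piezo drum triggers, sketched below under that assumption, is a small per-pad state machine: once a reading crosses a noise threshold, keep the largest value over a short scan window and report it as the hit intensity, then ignore the pad briefly while the piezo rings. Because each pad has its own detector, simultaneous hits on different pads are all reported. `THRESHOLD`, `SCAN_SAMPLES`, `MASK_SAMPLES` and `PadDetector` are hypothetical names and values; on the Arduino the same logic would run inside `loop()` in C++.

```python
from dataclasses import dataclass
from typing import List, Tuple

THRESHOLD = 40      # hypothetical: readings below this are treated as noise
SCAN_SAMPLES = 3    # hypothetical: samples searched for the peak after onset
MASK_SAMPLES = 10   # hypothetical: samples ignored after a hit (piezo ringing)


@dataclass
class PadDetector:
    state: str = "idle"   # "idle", "scanning" or "masked"
    peak: int = 0
    counter: int = 0

    def update(self, value: int) -> int:
        """Feed one sample; return the hit intensity (peak), or 0 if no hit."""
        if self.state == "idle":
            if value >= THRESHOLD:
                self.state, self.peak, self.counter = "scanning", value, 1
            return 0
        if self.state == "scanning":
            self.peak = max(self.peak, value)
            self.counter += 1
            if self.counter >= SCAN_SAMPLES:
                self.state, self.counter = "masked", 0
                return self.peak
            return 0
        # masked: ignore the ringing until the mask window has elapsed
        self.counter += 1
        if self.counter >= MASK_SAMPLES:
            self.state = "idle"
        return 0


def detect(frames: List[List[int]]) -> List[Tuple[int, int, int]]:
    """frames[t][pad] are raw readings; returns (t, pad, intensity) hits."""
    detectors = [PadDetector() for _ in range(len(frames[0]))] if frames else []
    hits = []
    for t, frame in enumerate(frames):
        for pad, value in enumerate(frame):
            intensity = detectors[pad].update(value)
            if intensity:
                hits.append((t, pad, intensity))
    return hits
```

The mask window is the main trade-off: too short and the ringing registers as extra hits, too long and fast repeated strokes on the same pad are lost.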

On the VR side, Unity needed to play a sound for each value received from the Arduino, exactly once per hit. It also had to detect hand interactions, provide audio-visual feedback, control sound looping and handle scene changes.
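If the Arduino streams raw readings rather than discrete hit events, the receiving side has to make sure a sound plays once per hit, not on every frame the value stays high. A minimal sketch of one way to do this, assuming such a raw stream, is an edge trigger with hysteresis: fire on the first reading above an upper level, then re-arm only after the reading drops below a lower level. `OneShotTrigger`, `on_level` and `off_level` are hypothetical names; in Unity the callback would typically call something like `AudioSource.PlayOneShot`.

```python
from typing import Callable


class OneShotTrigger:
    """Fire a callback once per press: on the rising edge above on_level,
    re-arming only after the value falls below off_level (hysteresis)."""

    def __init__(self, on_level: int, off_level: int, fire: Callable[[int], None]):
        if off_level >= on_level:
            raise ValueError("off_level must be below on_level")
        self.on_level = on_level
        self.off_level = off_level
        self.fire = fire
        self.armed = True

    def feed(self, value: int) -> None:
        if self.armed and value >= self.on_level:
            self.armed = False      # ignore further high readings of this hit
            self.fire(value)
        elif not self.armed and value < self.off_level:
            self.armed = True       # signal has settled; ready for the next hit
```

Using two levels instead of one stops a signal that hovers around a single threshold from retriggering the sound.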

Experience Design

The experience was intended to encourage engagement and learning. Its target audience was people aged 15 to 30.

For physical playing, ergonomic comfort was considered, including seating position, pad height and pad locations.

For the tangible interaction, several surface materials were tested for their tactile response when struck by hand. Three sensor types were tried (force-sensitive, light-based and vibration-based) to determine which gave the best response for playing.

To keep the experience immersive, the playing difficulty was tuned so that it stays challenging without being too easy or too difficult. The visuals were also kept simple and free of distractions.

For the guided learning experience, visual cues are provided in the form of instructions and incoming notes.
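The timing of the incoming notes is not specified here, so the sketch below assumes a simple rhythm-game style scheme: each pattern step is one beat long, and every note is spawned a fixed `lead_time` before it should be hit so that it has time to travel towards its pad. `schedule`, the step-list pattern format and the parameter names are all hypothetical.

```python
from typing import List, Tuple


def schedule(pattern: List[List[int]], bpm: float, lead_time: float,
             start: float = 0.0) -> List[Tuple[float, float, int]]:
    """Turn a step pattern into (spawn_time, hit_time, pad) tuples, in seconds.

    pattern[step] lists the pads to play on that step; steps are assumed to be
    one beat apart. Each note spawns lead_time seconds before its hit time.
    """
    beat = 60.0 / bpm                      # seconds per beat
    notes = []
    for step, pads in enumerate(pattern):
        hit_time = start + step * beat
        for pad in pads:
            notes.append((hit_time - lead_time, hit_time, pad))
    return notes
```

Deriving spawn times from hit times, rather than the other way round, keeps the notes locked to the tempo whatever travel time is chosen; `start` should be at least `lead_time` so that no note is due to spawn before the exercise begins.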

Alongside interacting with the pads, the player receives audio-visual feedback in VR, which creates an active relationship with the app.

An easy-to-use VR menu allows seamless switching between the two learning modes (scenes).

Interactions

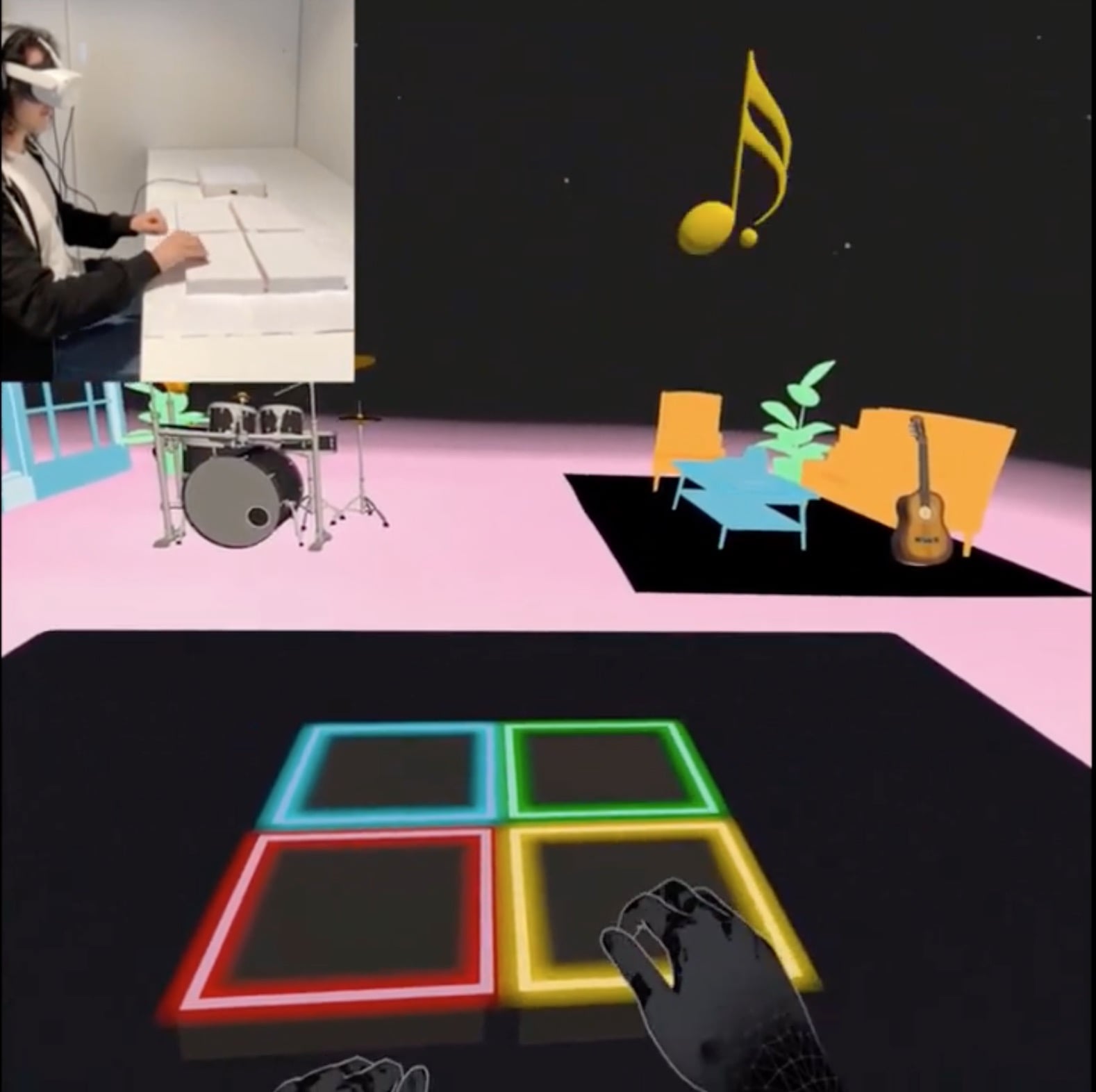

The VR experience uses two interactions, Tap and Poke: Tap for playing the pads and Poke for navigating the menu.

TAP

Interaction used for playing on the pads.

POKE

Interaction used for selecting menu items.

Visual Design

The dark environment keeps the player focussed on the highlighted areas, while ambient elements set a musical mood.

Each pad has a different stroke (boundary) color associated with its sound, and the sound notes share the color of their respective pad.

The pads give visual feedback when they register a tap, and the menu items do the same.

Audio Design

The percussion sounds cover four drums: Snare, Kick, Hi-Hat and Crash.

In Guided Learning mode, a missed-beat sound signals that the player has missed a note.

Menu items give audio feedback when poked.
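Deciding when to play the missed-beat sound requires some notion of a timing window. The sketch below assumes one: a hit counts for a note if it is on the same pad and within a hypothetical `HIT_WINDOW` of the note's time, each hit can satisfy only one note, and unmatched notes are misses. `judge` and `HIT_WINDOW` are illustrative names, and the matching is a simple greedy pass in note order.

```python
from typing import List, Tuple

HIT_WINDOW = 0.15  # hypothetical tolerance in seconds either side of a note


def judge(notes: List[Tuple[float, int]],
          hits: List[Tuple[float, int]]) -> Tuple[List[int], List[int]]:
    """Match player hits to expected notes.

    notes and hits are (time_in_seconds, pad) pairs. Each hit satisfies at
    most one note on the same pad within HIT_WINDOW (the closest one wins);
    unmatched notes are misses, where a missed-beat sound would be played.
    Returns (matched_note_indices, missed_note_indices).
    """
    used = [False] * len(hits)
    matched, missed = [], []
    for i, (note_time, note_pad) in enumerate(notes):
        best = None  # (hit_index, timing_error)
        for j, (hit_time, hit_pad) in enumerate(hits):
            if used[j] or hit_pad != note_pad:
                continue
            error = abs(hit_time - note_time)
            if error <= HIT_WINDOW and (best is None or error < best[1]):
                best = (j, error)
        if best is None:
            missed.append(i)
        else:
            used[best[0]] = True
            matched.append(i)
    return matched, missed
```

This batch version judges a finished take; in real time, a note would be declared missed (and the missed-beat sound played) as soon as the clock passes its time plus `HIT_WINDOW` without a matching hit.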

Timeline, Team and Mentors

The project was conceptualised and completed between 10 Feb 2023 and 17 Mar 2023.

The team members were Fazeleh D A, Joakim W, Karan D (self), Kiran S and Leo K.

Within the team, I was in charge of:

- Experience Design

- Technical Design - Tangible Interaction

- Unity optimisation

I had wanted to work on the Unity VR development; however, since I was the only team member who could handle the physical computing, I initially took on establishing the tangible aspects of the project. Later, I supported the Unity development and optimisation of the app.

The project was mentored by Prof. Jordi Solsona Belenguer, Prof. Asreen Rostami, Maurice Schleussinger, Luis Eduardo Velez Quintero and Paul Victor Vinegren.

Outcome

The potential value of this project includes:

- Learning of Rhythm

- Playing modes: Practice and Experimentation

- Engaging VR environment

- Feedback loop: Audio and Visual

- Hand-Eye-Sound coordination

- Progressive difficulty

Learning

The significant take-aways were:

- Sensor fidelity and delay affect the experience.

- Visual design influences head movement.

- Guided and playful learning both help, in different ways.

References

- Lucy Green. How popular musicians learn: A way ahead for music education. International Journal of Music Education, 24(2):103-120, 2002.

The full report of this project is available at this link.